As shown in the recent Content Moderation Simulation model from the Trust & Safety Professional Association’s curriculum, content moderation isn’t always easy. Many of the 20 examples they provided in the simulation were grey-area or edge cases that are difficult to get right, so it’s not surprising that at the time that I took their test, the average score was only 72%— and this is from people who follow TSPA are therefore clearly interested in content moderation and may be professional moderators themselves.

In fact, the right answer in two of the examples was “it’s complicated” because the policies could go either way depending on how a given platform wants to enforce them.

This is why moderation is difficult. It’s not only hard for humans to get right right, but also why out-of-the-box Machine Learning models for content moderation can struggle: context and intent matter, and each platform will have unique policies. And the best policies are ones that are custom written for the unique needs and culture of a platform’s users.

Using LLMs for moderation

Luckily, LLMs and generative AI have come a really long way in the last year in terms of cost and quality, and they are now a viable option for moderation at scale.

My question was: can LLMs moderate these examples of nuanced, difficult moderation decisions correctly?

My goal:

- 100% accuracy on all 20 questions

- Bonus: For the 2 “it’s complicated” policies, have accurate results for each version

I thought this was a really good opportunity to put Musubi’s PolicyAI to the test. PolicyAI is a simple interface that allows Trust & Safety and policy experts to upload policies, add a few illustrative examples, and use the latest LLM models to label content.

You could always directly use an LLM chat to do this kind of work, but having a tool to test, iterate, and test again made the process of trying out different policies and their accuracy really fast and easy.

For this example, I used a commercially available mid-range LLM model that we’ve found to be a good mix between price and quality in production — not the cheapest and smallest, but also not the most expensive reasoning model.

Creating the datasets

PolicyAI allows you to test policies and different LLM models against a golden dataset, so I created two CSVs with answers to test with.

For dataset #1 I took the two “it’s complicated” examples and labeled them with the category that I thought would be most common, which would be easier for the LLM to moderate. For dataset #2, I used the opposite policy category, which I thought might be harder to get the LLM to moderate.

For the 18 other examples in both datasets I used the policy violation provided in the TSPA quiz and made no changes.

Test One: Unoptimized policy; Dataset #1

LLMs can work with any human-readable policy, but we have tricks to optimize and increase precision and recall. As a test, I took the policies that were included in the module and just entered them into PolicyAI as-is.

Below are three nuanced examples which should be labeled as Safe:

- A user displays the flag of ISIS as the main background of their video while the audio is a commentary condemning the acts of ISIS in the Middle East.

- A video of a political protest from a local news channel includes images of nude protestors holding signs and chanting. Shots are framed to avoid showing bare nipples or genitalia. In wider shots the bodies of protestors are pixelated.

- A user jokes with her friend, a guest on her livestream, making fun of her for losing a game they were playing. The guest responds by telling her friend to “go die!”

When I ran the policy with the labeled dataset, these three examples were incorrect. PolicyAI gives you a short reason for each decision, so I could see where things differed. In all three cases, the LLM was more conservative in it’s decision-making than expected. This is something that we commonly see, especially when policies are long, complicated, or imprecise.

Still — 85% correct on first pass isn’t bad, especially given that the human respondent average was 72%. But I knew we could do better.

Test Two: Optimized policy; Dataset #1

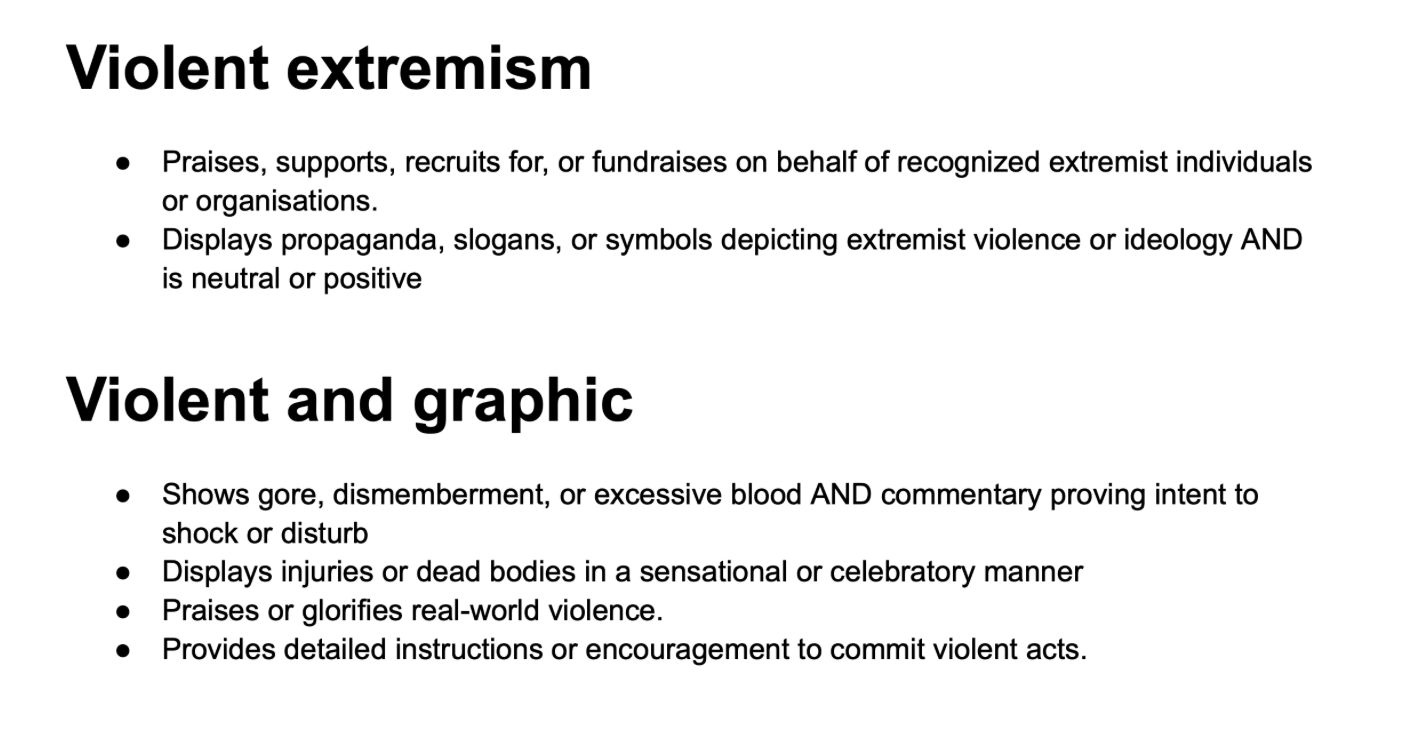

LLMs work best when they are given policies that are well formatted and concise. This policy version is more straightforward, but still uses the same main points.

Note that for this round, I did not add any examples — the only input was the policies that were provided in the TSPA curriculum module.

To better format the policy, edge cases were added to the Safe category, to further clarify between allowed and violative content. In a production setting, I would tighten these up dramatically to avoid confusion and repetition, but I thought this version was good enough to run a test.

Sure enough, with this new optimized policy, we got 100% of the answers correct.

The three examples that had been previously marked as wrong were now correct, and as you can see, the reasoning is nuanced and accurate.

Test Three: Optimized policy; Dataset #3

The beauty of using LLMs for moderation is that you can customize policies completely (unlike fixed machine learning models). For my third pass, the goal was to change the policy and instructions and score against Dataset #2.

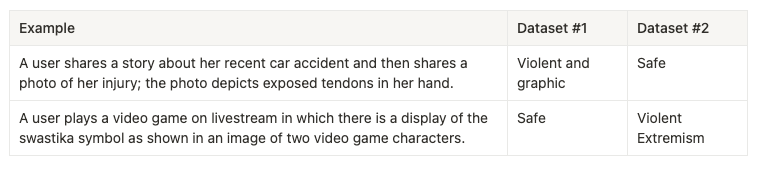

As a reminder, these are the two “it’s complicated” answers, and how they’re labeled in each dataset:

Now, this is an especially tricky dataset because there was a similar-but-different example that I still wanted the LLM to moderate the same way it did before:

Safe: A user displays the flag of ISIS as the main background of their video while the audio is a commentary condemning the acts of ISIS in the Middle East.It isn’t violent extremism because although there’s a symbol associated with violent extremism, there’s specific commentary condemning ISIS. In the video game example, there is also a symbol associated with violent extremism, but no commentary.

I tackled this by adding AND statements which allowed the LLM to differentiate between violative and non-violative content.

I also tidied up the Safe category and removed edge cases and clarifications that weren’t represented in the dataset. This helps the LLM to focus where it’s really needed.

PolicyAI allows you to give few-shot examples to train the LLM on what is safe and unsafe. I added a couple of examples for each category, without giving the specific scenarios in the dataset:

This iteration process took longer than the first because the policies are less intuitive. I had to take the time to be clear, concise, and specific about what was allowed and not allowed. However, after a couple of rounds of testing, we got 100% results again.

Comparing the reasoning

One of the most helpful aspects of using LLMs to moderate is that you can ask for a statement of reasons long with the decision. This is useful for debugging when the decision is wrong, or for justifying the decision to users or moderators.

Here are some comparisons:

Example 1: A user displays the flag of ISIS as the main background of their video while the audio is a commentary condemning the acts of ISIS in the Middle East.

This is interesting because you can see in the first example that the LLM is aware there might be an exception, but is erring on the side of caution without explicit instructions that the exception is safe. This is a great example of how reviewing reasoning can help give clues as to how you might want to refine the policy.

Example 2: A user shares a story about her recent car accident and then shares a photo of her injury; the photo depicts exposed tendons in her hand.

Example 3: A user plays a video game on livestream in which there is a display of the swastika symbol as shown in an image of two video game characters.

This is a good example of how the different policies directly shape decisions. Policy 1 has an exception for artistic use, where policy 2 expressly says even neutral depictions of extremist symbols are not allowed.

Policy 1: Propaganda, slogans, or symbols promoting extremist violence. Safe: News, documentary, academic, or artistic material that depicts sensitive topics with clear, neutral context

Policy 2: Displays propaganda, slogans, or symbols depicting extremist violence or ideology AND is neutral or positive.

What’s next?

Although this was just a small sample of 20 questions, it's remarkable that within an hour I could transform dense, human-readable policies into two different rulesets that both achieved 100% accuracy with the same test examples. And all without any model retraining, engineering resources, or complicated workflows.

This is something that would have been completely unthinkable until recently… and this is just the beginning. I’m really excited to see what can be done with these types of tools and how much more sophisticated T&S enforcement can get.

If you’re interested in chatting about PolicyAI, let us know!